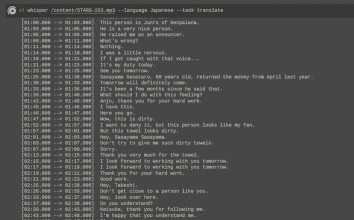

1

00:00:00,256 --> 00:00:06,400

Hello. I'm sure you're surprised to see an old man appear out of nowhere.

2

00:00:06,656 --> 00:00:12,800

Please take a few minutes of your time.

3

00:00:13,056 --> 00:00:19,200

This time, I'd like to share with you a little bit about myself.

4

00:00:19,456 --> 00:00:25,600

I'd like to share with you my story.

5

00:00:25,856 --> 00:00:32,000

Sorry for the late introduction.

6

00:00:32,256 --> 00:00:38,400

I'm a little rough around the edges, but I'm the mainstay of my family. I'm right after my father.

7

00:00:38,656 --> 00:00:44,800

As you can see, I'm not a magazine model.

8

00:00:45,056 --> 00:00:51,200

I'm a regular office worker. There's no Araike here.

9

00:00:51,456 --> 00:00:57,600

Because eight years ago, my wife left me for a young man she worked with part-time.

10

00:00:57,856 --> 00:01:04,000

She got tired of him and left him.

11

00:01:04,256 --> 00:01:05,536

Since then, I've been the mainstay of Araike for three years.

12

00:01:05,792 --> 00:01:11,680

I've been the mainstay of Araike's household, raising our three children.

13

00:01:11,936 --> 00:01:14,496

Now then...

14

00:01:15,008 --> 00:01:17,824

I would like to introduce you to my lovely children.

15

00:01:18,336 --> 00:01:24,480

First of all, my son, my eldest, is my favorite, a college student.

16

00:01:24,736 --> 00:01:30,880

He is a serious boy, and I guess he is more solid than I am.

17

00:01:31,136 --> 00:01:33,184

Lately, he's been...

18

00:01:33,440 --> 00:01:35,232

Muscle training, Yamanote...

19

00:01:35,744 --> 00:01:37,536

A typhoon is coming.

20

00:01:37,792 --> 00:01:43,936

Maybe he's got too much power.

21

00:01:44,960 --> 00:01:51,104

Next is Shinji, my second and youngest son.

22

00:01:53,920 --> 00:02:00,064

He doesn't go to school.

23

00:02:00,320 --> 00:02:06,464

Anime? That's all he ever does.

24

00:02:06,720 --> 00:02:12,864

A recluse. Me too.

25

00:02:13,120 --> 00:02:17,472

I don't get to see him much.

26

00:02:17,728 --> 00:02:19,520

I don't get to see him much, so I don't know what he's thinking.

27

00:02:19,776 --> 00:02:24,640

I don't know what he's thinking.

28

00:02:25,152 --> 00:02:31,296

I'm Rima, the eldest between two sons.

29

00:02:31,552 --> 00:02:37,696

She's the problem child of Araike.

30

00:02:37,952 --> 00:02:44,096

She never wears clothes and spends most of her time at home completely naked.

31

00:02:44,352 --> 00:02:50,496

She can't even study... and she's not in school.

32

00:02:54,592 --> 00:03:00,736

She's a gal? Also, for some reason, she's always sweaty since she was a kid.

33

00:03:02,528 --> 00:03:05,344

Is she expensive?

34

00:03:05,856 --> 00:03:12,000

This time, Rima is causing all kinds of trouble.

35

00:03:12,256 --> 00:03:18,400

Let's see... naked and covered in sweat with my eldest daughter.

1

00:00:01,200 --> 00:00:02,400

Hi there.

2

00:00:04,110 --> 00:00:05,520

I'm sure some of you are surprised to see your uncle out of nowhere.

3

00:00:06,180 --> 00:00:08,088

I know some of you were surprised.

4

00:00:09,180 --> 00:00:10,180

It's just for a few hours.

5

00:00:10,680 --> 00:00:11,680

Please bear with me.

6

00:00:13,410 --> 00:00:14,410

This time

7

00:00:14,614 --> 00:00:15,614

I'd like to send you

8

00:00:18,090 --> 00:00:19,680

I don't know if I should say this myself, but...

9

00:00:20,401 --> 00:00:21,401

I'm a little...

10

00:00:23,250 --> 00:00:24,270

I've changed a lot.

11

00:00:25,470 --> 00:00:26,470

My family

12

00:00:27,090 --> 00:00:28,620

It's a story about a coarse house.

13

00:00:30,660 --> 00:00:31,950

I'm late to introduce myself.

14

00:00:33,090 --> 00:00:34,090

I am

15

00:00:34,140 --> 00:00:35,504

The mainstay of our coarse family.

16

00:00:36,240 --> 00:00:37,800

I'm Taku, the father.

17

00:00:38,910 --> 00:00:40,110

As you can see...

18

00:00:40,560 --> 00:00:41,730

I'm not a magazine model

19

00:00:42,270 --> 00:00:43,270

I'm not

20

00:00:45,360 --> 00:00:46,830

I'm just an ordinary office worker

21

00:00:46,830 --> 00:00:47,830

I'm an ordinary businessman.

22

00:00:48,840 --> 00:00:49,840

Washing machine

23

00:00:50,268 --> 00:00:51,268

I don't have a washing machine

24

00:00:52,321 --> 00:00:53,321

I'm not a washer.

25

00:00:53,970 --> 00:00:54,180

8 years ago

26

00:00:54,210 --> 00:00:55,290

years ago, my wife

27

00:00:55,800 --> 00:00:56,070

part time job

28

00:00:56,070 --> 00:00:58,193

I've been working with a lot of young men.

29

00:00:59,670 --> 00:01:00,300

She got tired of me.

30

00:01:00,661 --> 00:01:01,661

She got tired of me.

31

00:01:01,860 --> 00:01:02,860

She left me.

32

00:01:04,140 --> 00:01:05,250

And then I went back to

33

00:01:06,030 --> 00:01:06,750

As the mainstay

34

00:01:06,990 --> 00:01:08,100

As the mainstay

35

00:01:09,120 --> 00:01:09,210

Three...

36

00:01:09,229 --> 00:01:10,229

I've raised three children.

37

00:01:10,410 --> 00:01:11,410

I've raised three children.

38

00:01:13,530 --> 00:01:14,530

And now...

39

00:01:15,000 --> 00:01:17,730

I would like to introduce you to my lovely children.

40

00:01:18,570 --> 00:01:19,920

Let's start from there.

41

00:01:21,630 --> 00:01:22,830

My eldest son Daisuke.

42

00:01:23,340 --> 00:01:24,340

He's a college student, isn't he?

43

00:01:26,100 --> 00:01:27,630

He's very serious.

44

00:01:28,650 --> 00:01:31,200

I guess he's more solid than I am.

45

00:01:31,950 --> 00:01:33,060

What is he doing these days?

46

00:01:33,870 --> 00:01:34,348

muscle training

47

00:01:34,348 --> 00:01:35,348

He's into muscle training.

48

00:01:35,730 --> 00:01:37,440

He seems to be working out a lot.

49

00:01:38,220 --> 00:01:38,591

Maybe he has too much power

50

00:01:38,591 --> 00:01:39,990

Maybe he has too much power.

51

00:01:43,444 --> 00:01:44,640

It might be amazing.

52

00:01:47,370 --> 00:01:48,370

Next...

53

00:01:49,230 --> 00:01:50,230

Second son

54

00:01:50,640 --> 00:01:50,910

Check

55

00:01:50,910 --> 00:01:51,000

of

56

00:01:51,327 --> 00:01:52,327

Check it out!

57

00:01:53,880 --> 00:01:54,960

This boy

58

00:01:56,520 --> 00:01:57,870

He doesn't go to school.

59

00:02:02,400 --> 00:02:03,400

Anime

60

00:02:03,750 --> 00:02:03,990

Mecha

61

00:02:04,230 --> 00:02:04,500

or...

62

00:02:05,130 --> 00:02:06,540

That's all he does.

63

00:02:09,085 --> 00:02:10,085

That's nice.

64

00:02:11,550 --> 00:02:12,550

Me too.

65

00:02:13,230 --> 00:02:15,420

I don't know what he's thinking.

66

00:02:17,790 --> 00:02:19,530

I don't know what he's thinking.

67

00:02:20,220 --> 00:02:21,420

I don't know what he's thinking.

68

00:02:23,880 --> 00:02:24,880

Finally.

69

00:02:25,800 --> 00:02:26,800

The first son

70

00:02:27,180 --> 00:02:28,180

The second son

71

00:02:28,890 --> 00:02:29,890

The eldest daughter.

72

00:02:29,945 --> 00:02:30,945

Now.

73

00:02:32,790 --> 00:02:34,020

This is Koga.

74

00:02:34,650 --> 00:02:36,210

She's the problem child of the family.

75

00:02:36,990 --> 00:02:37,990

First of all...

76

00:02:38,220 --> 00:02:38,640

Clothes.

77

00:02:38,790 --> 00:02:39,790

No clothes.

78

00:02:39,960 --> 00:02:42,480

She spends most of her time at home completely naked.

79

00:02:44,400 --> 00:02:45,400

Also...

80

00:02:45,810 --> 00:02:46,810

I don't know how to say it.

81

00:02:47,430 --> 00:02:48,690

I can't even study.

82

00:02:49,500 --> 00:02:51,390

I didn't finish school.

83

00:02:53,283 --> 00:02:54,283

I'm what's called

84

00:02:54,660 --> 00:02:54,960

Gyaru

85

00:02:54,960 --> 00:02:55,960

I guess you could say

86

00:02:56,790 --> 00:02:57,840

I don't know why.

87

00:02:58,710 --> 00:03:01,200

I've come all this way since I was a kid.

88

00:03:02,550 --> 00:03:04,290

I wonder if she has hyperhidrosis.

89

00:03:06,120 --> 00:03:07,120

This time

90

00:03:07,530 --> 00:03:07,830

This

91

00:03:08,040 --> 00:03:09,040

is

92

00:03:10,800 --> 00:03:12,030

It's going to be a problem.

93

00:03:14,010 --> 00:03:15,207

Well then...

94

00:03:15,750 --> 00:03:16,890

Naked eldest daughter and

95

00:03:18,089 --> 00:03:19,089

covered in
