Thanks, I'll give that one a go.

To speed things up, I'd look into whispercpp; it should be faster without any quality loss.

Whisper and its many forms

- Thread starter SamKook



If you're comparing VADPro with the other VADs, then yes, it's normal since it has different defaults which should provide a better result, at the price of taking longer.

If you're comparing to VADPro from some time ago, I don't use it often enough to tell if anything changed.

It's also possible you got a different GPU type, since there are a few that can be assigned to you and they have different performance.


Hello all,

FYI, an updated version of DeepSeek V3 has been released on the website and API.

I tried the new version on my go-to test subtitle, DDB-271 transcribed with WhisperWithVAD Pro, and I think the updated version produces a better translation.

I've attached a zip with the transcribed SRT and two translated SRTs: one translated with the original DeepSeek V3 and one with the updated V3-0324. Neither translation has had any manual editing or cleanup.

Here is the changelog:


Hello all, I'm using WhisperWithVAD_PRO and getting the following error:

"ValueError: numpy.dtype size changed, may indicate binary incompatibility. Expected 96 from C header, got 88 from PyObject"

It looks like the same issue as when they changed the torch version.
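That error generally means a package containing compiled code was built against a different NumPy version than the one loaded in the session. Before trying a fix, it can help to see which versions the runtime actually has installed. A minimal sketch using only the standard library's `importlib.metadata`; the package list is my own assumption based on the packages mentioned in this thread:

```python
from importlib import metadata

# Packages mentioned in this thread (assumption: add or remove names as needed).
PACKAGES = ("numpy", "pandas", "tensorflow", "torch", "spleeter")


def installed_versions(names=PACKAGES):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:12} {version or 'not installed'}")
```

Note that this reports what is installed on disk. After a `pip` upgrade in a running Colab session, the already-imported module (e.g. `numpy.__version__`) can still be the old one until the session is restarted.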

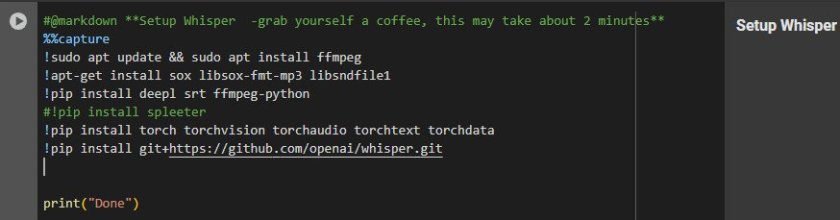

As a workaround for now, you can comment out(add a # at the beginning of the line to disable it) the spleeter installation line in the "setup whisper" section(click show code to edit) and it should look like this:

You need to run the code(press the play button) after you've done this. If you've already ran the code, you'll need to run it again.

Spleeter is used to separate the voice from the background noise.

It's not used by default so unless you set "source_separation" to True(meaning you check the checkbox next to it), it won't change anything(and if you do enable it after disabling the spleeter install, it will give an error).
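Since enabling source_separation without spleeter installed only fails partway through the run, a small guard could make that failure explicit up front. This is a hypothetical helper, not part of WhisperWithVAD_PRO; it relies on `importlib.util.find_spec`, which returns None when a module can't be found:

```python
import importlib.util


def check_source_separation(source_separation: bool) -> None:
    """Fail early with a readable message if vocal separation is requested
    but the spleeter package was never installed."""
    if source_separation and importlib.util.find_spec("spleeter") is None:
        raise RuntimeError(
            "source_separation is enabled, but spleeter is not installed. "
            "Uncomment the spleeter install line in 'setup whisper' and rerun it, "
            "or uncheck source_separation."
        )


# Hypothetical placement: call it right after the parameters are set.
# check_source_separation(source_separation)
```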


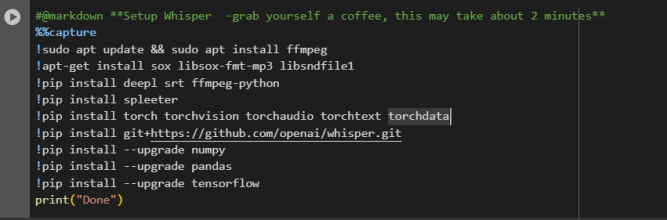

Upgrading numpy, pandas and tensorflow also seems to resolve the error. Not sure if it's actually necessary to upgrade all of them.

Like so:

SamKook and Novus.Toto, thank you so much. Both solutions worked. I really appreciate the prompt replies and visual aids!

I tried this but got this error. I'm not a computer whiz. Any ideas?

```
NameError                                 Traceback (most recent call last)
<ipython-input-3-754953b95a0a> in <cell line: 0>()
     35 out_path = os.path.splitext(audio_path)[0] + ".srt"
     36 out_path_pre = os.path.splitext(audio_path)[0] + "_Untranslated.srt"
---> 37 if source_separation:
     38     print("Separating vocals...")
     39     get_ipython().system('ffprobe -i "{audio_path}" -show_entries format=duration -v quiet -of csv="p=0" > input_length')

NameError: name 'source_separation' is not defined
```

That error happens because the script can't see the variables defined in the first code block. You just have to run that block, at the top of the page, first.
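To get a readable message instead of a NameError when the parameters cell hasn't been run, the transcription cell could check for the names it needs before using them. A sketch; `require_params` and the `REQUIRED_PARAMS` list are hypothetical, with the two names taken from the traceback above:

```python
# Hypothetical list: names the transcription cell expects the parameters
# cell to have defined. Adjust to match your copy of the notebook.
REQUIRED_PARAMS = ("audio_path", "source_separation")


def require_params(namespace, required=REQUIRED_PARAMS):
    """Raise a readable error if any required name is missing from namespace."""
    missing = [name for name in required if name not in namespace]
    if missing:
        raise RuntimeError(
            "Missing " + ", ".join(missing)
            + ": run the parameters cell at the top of the page first."
        )


# At the top of the transcription cell:
# require_params(globals())
```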

With the extra step of restarting the session, you can avoid the "numpy.dtype size changed" error without upgrading those packages. The workflow is: mount Google Drive, run setup, click Runtime > Restart session, set the parameters, then run Whisper.

In WhisperPro, I use an initial_prompt = "これは日本のアダルトビデオです。喘ぎ声、卑猥な表現、スラング(イク、中出し、気持ちいい、お願い、イッちゃうなど)を正確に文字起こししてください。" It translates to: "This is a Japanese adult video. Please accurately transcribe the moans, obscene expressions, and slang (such as iku, nakadashi, kimochii, onegai, icchau, etc.)." I do find that it improves the transcription.

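Updated! I had an AI refine the prompt. Now I use initial_prompt = "これは日本AV。登場人物の会話、喘ぎ声、卑猥なスラングや独り言を、一言一句省略せずに全て正確に文字起こししてください。特に次の言葉や類似表現に注意してください:ああん、イク、イッちゃう、中出し、気持ちいい、お願い、ダメ、もっと、やばい、突いて、んっ、ふぅ、ちんちん、マンコ、ザーメンなど" (roughly: "This is a Japanese AV. Transcribe all of the characters' dialogue, moans, obscene slang, and muttering accurately, word for word, without omitting anything. Pay particular attention to the following words and similar expressions: aan, iku, icchau, nakadashi, kimochii, onegai, dame, motto, yabai, tsuite, n, fuu, chinchin, manko, zaamen, etc.")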
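If you keep tweaking the word list, it may be easier to keep the instruction and the vocabulary separate and join them the same way the prompt above does (a colon, words separated by 、, ending in など). A small hypothetical helper; the commented call assumes the openai-whisper package, where `initial_prompt` is a real parameter of `model.transcribe`, and may not match how WhisperWithVAD Pro passes the prompt internally:

```python
def build_initial_prompt(instruction: str, vocabulary: list[str]) -> str:
    """Instruction, a colon, the words joined with the Japanese comma 、, then など ("etc.")."""
    return instruction + ":" + "、".join(vocabulary) + "など"


# Hypothetical usage with the openai-whisper package:
# import whisper
# model = whisper.load_model("large-v2")
# prompt = build_initial_prompt(
#     "特に次の言葉や類似表現に注意してください",
#     ["イク", "気持ちいい", "お願い"],
# )
# result = model.transcribe(audio_path, language="ja", initial_prompt=prompt)
```

One design note: as far as I know, openai-whisper keeps only the tail end of an overly long prompt, so a very long word list may get cut from the front.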


Gemini Pro helped me add checkpoint/resume functionality to Mei2's WhisperWithVAD_pro Colab code. It now saves a checkpoint file and a partial SRT periodically. Ever been frustrated when you hit the time limit and lost all your progress? If you crash from memory issues, reach the time limit (probably a little over 4 hours per day), or close your tab, don't worry: use a different Google account or just wait until the next day, then click Run All. If it finds those two files in the WhisperJAV folder, it will resume. Check it out and see what settings I like to use. Note that I removed the Spleeter and DeepL functionality, since I didn't use them.
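The general idea behind a checkpoint resume can be sketched like this. It is not the actual notebook code: it assumes the VAD has already split the audio into a deterministic list of (start, end) chunks, and `transcribe_with_resume` and the `transcribe` callback are hypothetical names. The file names follow the ckpt.json and partial.srt mentioned later in the thread. Each save writes a temporary file and then swaps it in with `os.replace`, so a crash mid-write shouldn't leave a corrupted checkpoint:

```python
import json
import os


def srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, e.g. 3.5 -> 00:00:03,500."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02}:{m:02}:{s:02},{ms:03}"


def atomic_write(path: str, text: str) -> None:
    """Write to a temp file first, then swap it in so a crash can't leave half a file."""
    tmp = path + ".tmp"
    with open(tmp, "w", encoding="utf-8") as f:
        f.write(text)
    os.replace(tmp, path)


def transcribe_with_resume(chunks, transcribe, folder, every=10):
    """chunks: list of (start, end) seconds; transcribe(start, end) -> text.
    Returns a list of (start, end, text), resuming from folder/ckpt.json if present."""
    ckpt_path = os.path.join(folder, "ckpt.json")
    srt_path = os.path.join(folder, "partial.srt")

    done = []
    if os.path.exists(ckpt_path):
        with open(ckpt_path, encoding="utf-8") as f:
            done = [tuple(item) for item in json.load(f)["done"]]

    def save():
        atomic_write(ckpt_path, json.dumps({"done": done}, ensure_ascii=False))
        srt = "".join(
            f"{i}\n{srt_time(s)} --> {srt_time(e)}\n{t}\n\n"
            for i, (s, e, t) in enumerate(done, start=1)
        )
        atomic_write(srt_path, srt)

    # Skip the chunks already recorded in the checkpoint.
    for start, end in chunks[len(done):]:
        done.append((start, end, transcribe(start, end)))
        if len(done) % every == 0:
            save()  # periodic checkpoint
    save()  # final save once every chunk is done
    return done
```

Because the checkpoint only stores finished subtitles, resuming is only valid if the same file is processed with the same VAD settings. A more careful version would also record the audio file name and settings in ckpt.json and ignore a checkpoint that doesn't match.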

Edit: It needs an audio_folder path instead of a single audio file path. It looks for every m4a, wav, flac, or mp3 file in the audio_folder "/content/drive/My Drive/WhisperJAV".
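Collecting the files could look something like this. It's a sketch, assuming only files directly inside the folder are wanted (no subfolders) and that extensions are matched case-insensitively; `find_audio_files` is a hypothetical name:

```python
from pathlib import Path

AUDIO_EXTENSIONS = {".m4a", ".wav", ".flac", ".mp3"}


def find_audio_files(audio_folder):
    """Return audio files directly inside audio_folder, sorted by path."""
    return sorted(
        p for p in Path(audio_folder).iterdir()
        if p.is_file() and p.suffix.lower() in AUDIO_EXTENSIONS
    )


# audio_folder = "/content/drive/My Drive/WhisperJAV"
# for audio_path in find_audio_files(audio_folder):
#     ...
```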

Edit2: I reuploaded it. I had incorrectly loaded the VAD and Whisper models inside the loop, which caused out-of-memory issues on the next audio file. I moved the model loading outside the loop and added memory cleanup after each file completes.
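The Edit2 fix follows a common pattern: load the expensive models once, reuse them for every file, and release per-file memory between files. A sketch with hypothetical `load_models` and `transcribe_file` callbacks. `torch.cuda.empty_cache()` only hands back memory PyTorch has cached but isn't using, so per-file data must be unreferenced first (here it goes out of scope when `transcribe_file` returns, before `gc.collect()`):

```python
import gc

try:
    import torch  # optional: only used to release cached GPU memory
except ImportError:
    torch = None


def free_memory():
    """Collect unreferenced objects, then return cached GPU memory if available."""
    gc.collect()
    if torch is not None and torch.cuda.is_available():
        torch.cuda.empty_cache()


def process_folder(audio_files, load_models, transcribe_file):
    """Load models once, reuse them for every file, clean up after each one.
    transcribe_file(models, audio_path) is assumed to return the SRT path."""
    models = load_models()  # expensive: done once, outside the loop
    srt_paths = []
    for audio_path in audio_files:
        srt_paths.append(transcribe_file(models, audio_path))
        free_memory()  # drop this file's leftovers before the next one
    return srt_paths
```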


So does that mean I don't need to worry if Colab crashes or restarts? It's really frustrating when it hits the daily usage limit and I have to start over just to get different results. Does it save the project automatically?

Yes, all results are saved in partial.srt and ckpt.json inside /WhisperJAV. Don't move or edit those files while it's running.

Thank you!

What's your edit? I'm using the one you uploaded before.

I reuploaded it. See my edit.
