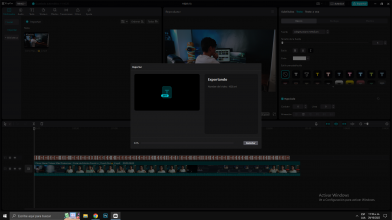

Maybe someone can compare my MIAA-698 subtitle, made with the Windows version of CapCut. The only problem with this subtitle is the sync, because I split the movie into 60-minute parts and used DeepL to translate from Japanese to English. Please check it.
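When each part is transcribed separately, every timestamp in the second part starts again from 00:00:00. A minimal Python sketch of one way to fix that, assuming each part is exactly 60 minutes long and the subtitles are plain SRT: shift each part's timestamps by its start time, then merge and renumber the cues. The function names are hypothetical and not part of CapCut or DeepL.

```python
import re
from typing import List

# Matches SRT timestamps such as 00:01:02,345 (hours, minutes, seconds, milliseconds)
TIMESTAMP = re.compile(r"(\d{2}):(\d{2}):(\d{2}),(\d{3})")


def shift_srt(text: str, offset_ms: int) -> str:
    """Add offset_ms to every timestamp in an SRT document (clamped at zero)."""

    def shift(match: "re.Match[str]") -> str:
        h, m, s, ms = (int(g) for g in match.groups())
        total = max(((h * 60 + m) * 60 + s) * 1000 + ms + offset_ms, 0)
        h, rem = divmod(total, 3_600_000)
        m, rem = divmod(rem, 60_000)
        s, ms = divmod(rem, 1000)
        return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"

    return TIMESTAMP.sub(shift, text)


def merge_srt(parts: List[str], part_length_ms: int) -> str:
    """Shift part i by i * part_length_ms, then merge and renumber all cues."""
    cues: List[List[str]] = []
    for i, part in enumerate(parts):
        shifted = shift_srt(part, i * part_length_ms)
        for block in re.split(r"\n\s*\n", shifted.strip()):
            lines = block.splitlines()
            if len(lines) >= 2:
                cues.append(lines[1:])  # drop the old cue number, keep timing + text
    blocks = ["\n".join([str(n)] + cue) for n, cue in enumerate(cues, start=1)]
    return "\n\n".join(blocks) + "\n"
```

For two one-hour parts you would call `merge_srt([part1_text, part2_text], 60 * 60 * 1000)`. If the split points are not exactly at 60:00, measure the real start time of each part and shift by that instead.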

akiba resident JAV subtitlers & subtitle talk★NOT A SUB REQUEST THREAD★

- Thread starter desioner

The 413 error should be fixed now. It'll also save the original Japanese output in a separate file when using DeepL (not possible when Whisper translates it).

Vosk is based on Kaldi. It uses an acoustic model that looks at each fraction of a second, and predicts individual phonemes. Then a language model guesses which words those phonemes form. Since you know where each phoneme starts, it's easy to create accurate timestamps.

Whisper doesn't have an acoustic model. It takes an entire 30-second slice of audio, and tries to predict the subtitles, timestamps included. It doesn't actually understand what timestamps are; it's just been trained on lots of text that contains them.
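To illustrate why word-level timings make subtitle timing easy: once a recognizer gives you each word with a start and end time, grouping words into cues takes only a few lines. A minimal sketch, assuming the words arrive as (text, start_seconds, end_seconds) tuples; that input shape and the default thresholds are illustrative assumptions, not Vosk's actual output format.

```python
from typing import List, Tuple

Word = Tuple[str, float, float]  # (text, start_seconds, end_seconds)


def srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, e.g. 3.4 -> 00:00:03,400."""
    ms = round(seconds * 1000)
    h, rem = divmod(ms, 3_600_000)
    m, rem = divmod(rem, 60_000)
    s, ms = divmod(rem, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"


def words_to_srt(words: List[Word], max_gap: float = 0.8,
                 max_duration: float = 5.0, joiner: str = " ") -> str:
    """Group timed words into cues; start a new cue after a pause or when a cue gets too long."""
    cues: List[List[Word]] = []
    for word in words:
        if cues:
            current = cues[-1]
            gap = word[1] - current[-1][2]      # silence since the previous word
            duration = word[2] - current[0][1]  # cue length if this word is added
            if gap <= max_gap and duration <= max_duration:
                current.append(word)
                continue
        cues.append([word])
    blocks = [
        f"{n}\n{srt_time(cue[0][1])} --> {srt_time(cue[-1][2])}\n"
        + joiner.join(w[0] for w in cue)
        for n, cue in enumerate(cues, start=1)
    ]
    return "\n\n".join(blocks) + ("\n" if blocks else "")
```

Japanese text is not written with spaces between words, so for Japanese output you would pass `joiner=""`. Whisper has no equivalent per-phoneme timing to build on, which is why its timestamps can drift.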

Thanks!!! I'll give the new version a try. Meanwhile, I upgraded my Colab to pay-as-you-go and I get a T4 GPU, but I don't see any visible improvement in speed/performance. Your code to check for a GPU before running was quite helpful.

Quick question: would changing the chunk-threshold make any difference in quality of the output?

Yes, it does; it changes the translation. I tested it and found chunk2 or chunk3 to be slightly better.

You should be aware that it gives a different translation on each run when the speech is not very clear. I'm only looking at the sentences that don't change between runs. Chunk2 and chunk3 give the same result, while chunk1 changes: a few sentences differ and are not as good as with chunk2 and chunk3.
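Picking out the sentences that stay the same across runs can be automated. A sketch, assuming every run produced the same number of cues in the same order (Whisper may segment differently between runs, in which case the cues would first need aligning by timestamp):

```python
from typing import List, Sequence


def stable_lines(runs: Sequence[Sequence[str]]) -> List[int]:
    """Return the indexes of cues whose text is identical in every run."""
    if not runs:
        return []
    length = min(len(run) for run in runs)  # ignore trailing cues missing from some runs
    return [i for i in range(length) if len({run[i].strip() for run in runs}) == 1]
```

Cues that are not in the returned list are the unclear ones worth checking by ear.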

Thanks @Non_Entity. The new changes work very well. Just in case you feel like adding more features, here are a couple of thoughts:

- It would be nice to be able to process multiple files (batch processing);

- It would be nice if the DeepL API were called only for the text of the subs, to reduce the DeepL cost by reducing the number of characters sent;

cheers

PS. By pure luck, Colab Pro gave me an A100 GPU during one of my runs. However, in my experience Whisper performance didn't change at all. It seems that more power/VRAM doesn't change the speed, so a T4 GPU seems to be the best cost/performance setup.
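The second suggestion (send only the subtitle text to DeepL) could look roughly like this: strip the cue numbers and timing lines, translate just the text, then put the cues back together. `translate` is a hypothetical stand-in for whatever DeepL call the notebook makes; no real DeepL API is used here.

```python
import re
from typing import Callable, List, Tuple

Cue = Tuple[str, str, str]  # (cue number, timing line, subtitle text)


def parse_srt(srt: str) -> List[Cue]:
    """Split an SRT document into cues; cues without any text line are skipped."""
    cues: List[Cue] = []
    for block in re.split(r"\n\s*\n", srt.strip()):
        lines = block.splitlines()
        if len(lines) >= 3:
            cues.append((lines[0], lines[1], "\n".join(lines[2:])))
    return cues


def translate_text_only(srt: str, translate: Callable[[List[str]], List[str]]) -> str:
    """Send only the subtitle text to `translate`, then rebuild the SRT."""
    cues = parse_srt(srt)
    texts = [text for _, _, text in cues]
    translated = translate(texts)  # hypothetical translator: list of lines in, list out
    if len(translated) != len(texts):
        raise ValueError("translator returned a different number of lines")
    blocks = [f"{num}\n{timing}\n{new}" for (num, timing, _), new in zip(cues, translated)]
    return "\n\n".join(blocks) + "\n"
```

The cue numbers and `00:00:01,000 --> 00:00:02,000` lines never leave your machine, so only the dialog characters count toward the request.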

Is it possible to run it offline without needing to upload the MP3 every time?

Do you mean packaging the code to run locally? The large Japanese model seems to need more than 12GB of GPU VRAM. At least I was not able to run it locally: I got an out-of-memory runtime error. It would be nice if OpenAI optimised the model to work on lower specs.

In the case of Colab, I found it easier to link my Drive to Colab than to upload local files. The bad thing is that with every new session I have to reconnect my Drive (I understand there's a way to automate it, but I haven't tried). The good thing is that the transfers to Colab are much faster, I believe.

Oh, I see. Yeah, I'm not sure how it works. I'm not a programmer.

Also, I'm not sure how Google Drive works. Don't you have to upload to Google anyway?

Yes, the upload will be to Drive instead of Colab. This is how I do it (and I hope others can review it and suggest easier ways): I extract and save the MP3s of the movies I want to sub into one folder, and I keep that folder in sync with my Drive (Google Drive app). This way I can run Whisper with different parameters on the same file without needing to upload it again between sessions.

I've noticed the way you split lines makes a difference with DeepL. Translating each line separately removes context, but running them together makes it blend the lines and repeat itself. See avatarthe's tests:

Because eight years ago, my wife left me for a young man she worked with part-time.

She left me for a young man she was working with.

Since then, I've been the mainstay of Araike for three years.

I've been the mainstay of Araike's household, raising our three children.

The best fix I've found is putting quotes between the lines. 「」 and "" work about equally well, and remove most (not all) of the repetitions.

I've added this to the notebook. My earlier fix was using a sliding window, which also worked, but tripled the API cost.
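A sketch of the quote-separator idea with a safety check: wrap each line in 「」, send all lines in one request, split the result back on newlines, and fall back to one request per line if the line count no longer matches. `translate` is a hypothetical stand-in for a DeepL call, and the notebook's actual implementation may differ.

```python
from typing import Callable, List

# Quote characters the translator might leave around each line in its output
QUOTE_CHARS = "「」『』\"“”"


def translate_with_quotes(lines: List[str], translate: Callable[[str], str],
                          open_q: str = "「", close_q: str = "」") -> List[str]:
    """Translate all lines in one request, each wrapped in quotes to keep them apart."""
    payload = "\n".join(f"{open_q}{line}{close_q}" for line in lines)
    output = translate(payload)  # hypothetical stand-in for a DeepL call
    cleaned = [part.strip().strip(QUOTE_CHARS).strip()
               for part in output.split("\n") if part.strip()]
    if len(cleaned) != len(lines):
        # Lines were merged or split: fall back to one request per line (less context).
        return [translate(line) for line in lines]
    return cleaned
```

The fallback keeps the subtitles aligned with their timestamps at the cost of context; the sliding-window approach mentioned above trades API cost for context instead.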

I love that you keep updating this. Really appreciate it.

Is there any chance you could add a queue feature to it? For example, giving us more input fields for the audio path, maybe four more, so that when it finishes one file it moves on to the next one automatically.
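A queue like that boils down to a loop over several input paths that keeps going when one file fails. A minimal sketch; `transcribe` is a hypothetical stand-in for the notebook's transcription step.

```python
from typing import Callable, Dict, Iterable, Tuple


def process_queue(
    paths: Iterable[str], transcribe: Callable[[str], str]
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Run transcribe() on each path in order; return (results, errors)."""
    results: Dict[str, str] = {}
    errors: Dict[str, str] = {}
    for path in paths:
        try:
            results[path] = transcribe(path)
        except Exception as exc:  # keep going so one bad file doesn't stop the queue
            errors[path] = repr(exc)
    return results, errors
```

Collecting errors instead of stopping means you can leave a batch running overnight and only re-run the files listed in `errors`.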

So I downloaded OYC-036 years ago, at least 5 years back. I was watching it today and noticed it's a hardsub with Asian-language subtitles. Odd, as that's the only one I've run into. Does anyone know which Asian language this is?

Also, does anyone know what software can be used to OCR this and turn it into a soft sub?

It's Chinese (Traditional).

The best way to OCR this into a soft sub is VideoSubFinder. See the tutorial here.

How are y'all able to get accurate subtitle translations from pyTranscriber? Is there a certain site you download the movies from? I upload the entire movie or the MP3 audio of the movie and I get inaccurate translations. Am I doing something wrong?

Oh.

If you're asking how people get so many more subtitle lines and upload them online, I can tell you a little:

1. Some subtitles aren't really translated; people just add more lines to the file (their own imagination, guessing).

2. How about pyTranscriber?

If the dialog in the movie is clear enough, then it works, and you will get more lines in the file.

The main problem is that some movies have so much sound (music, other people) and the dialog is not loud enough.

You need an audio program to boost the sound level (Audacity is free).

Accurate translations? (For me, I'd say it's better than nothing.)

Clear sound, increase the volume of the dialog... just try it.

That's all I know for now.
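Audacity's Amplify and Normalize effects do this volume boosting in a GUI. For a scripted alternative, here is a minimal peak-normalization sketch using only the Python standard library, assuming a 16-bit PCM WAV file (export one from Audacity first). It raises everything, music included, so it helps with quiet recordings but does not separate dialog from background noise.

```python
import array
import sys
import wave


def normalize_peak(samples: array.array, target_peak: float = 0.9) -> array.array:
    """Scale 16-bit samples so the loudest one reaches target_peak of full scale."""
    peak = max((abs(s) for s in samples), default=0)
    if peak == 0:
        return array.array("h", samples)  # silence: nothing to scale
    gain = target_peak * 32767 / peak
    # Clip to the 16-bit range in case rounding overshoots
    return array.array("h", (max(-32768, min(32767, round(s * gain))) for s in samples))


def normalize_wav(src: str, dst: str, target_peak: float = 0.9) -> None:
    """Peak-normalize a 16-bit PCM WAV file (any channel count) into dst."""
    with wave.open(src, "rb") as reader:
        params = reader.getparams()
        if params.sampwidth != 2:
            raise ValueError("this sketch only handles 16-bit PCM WAV")
        samples = array.array("h", reader.readframes(params.nframes))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    out = normalize_peak(samples, target_peak)
    if sys.byteorder == "big":
        out.byteswap()
    with wave.open(dst, "wb") as writer:
        writer.setparams(params)
        writer.writeframes(out.tobytes())
```

If a single loud sound effect sets the peak, peak normalization barely changes anything; that is when a compressor (also available in Audacity) is the better tool.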

It's like Maload said. The clearer the movie dialog, the better pyTranscriber works, and together with Audacity it's great. Remember, pyTranscriber will give you timing codes and some subs at the very least. The rest is up to you.

You can use either the .mp4 (audio/video file) or the .mp3 (audio file) with pyTranscriber. I think MP3 works best, because with Audacity you can make the audio a little better first.

And remember, 90% of JAV dialog is the same words/phrases.

You should be prepared to guess in some scenes, since the music and other people talking are just too hard to understand... unless you know Japanese.

If it's girls at school and it's just background talk, then write something like Girl A: "How was the exam?" Girl B: "Um, I didn't score well." Or something like that.

But look/listen for the main star(s), or whoever talks the loudest. That's your focus when everyone is talking at once. Or just skip it. Sometimes that's better than stressing.

Good, sound advice. (Pun intended.) Well done. Thanks for sharing.

Thank you, my friend. It's one way that I think truly works, and of course your skills would greatly benefit any subbing job.

Hello, could you help me? How did you download the CapCut .srt file? I can't find that option, or how to extract the subtitle to use it.

I can't help you, but YOU will need CapCut, editing software, Notepad... omg, I forget.

I'm not sure you want to go through all the trouble, honestly.

CapCut, like pyTranscriber, relies on the dialog being clear. More so, I think. And it requires you to chop up your video... ugh, it's annoying to think about. Anyway, I tried it, wasn't a fan, and deleted it off my phone.

But maybe it can work for you.

Hello. I want to know how to get the .srt file from the PC version. The PC speech-to-text option is very precise, but I can't find the option to download the .srt file. I've attached an image of the PC version of CapCut.

I'm trying to download only the .srt file, but it doesn't download. I don't know if that option is only available in the paid version; that's why I'm asking.

This file is just for testing and I saw almost 99% translation effectiveness.
