@Casshern2 I just saw this thread. I've recently started playing with ComfyUI and various workflows. I've been looking for something that produces good image-to-video results, and so far I've only had some success with WAN 2.1. It seems like you've been using WAN 2.2, right? Are you using a workflow that combines the high-noise and low-noise diffusion models, or a single WAN 2.2 model? In my experience, WAN 2.1 is competent but not great at adhering to text prompts; I've been using a CFG value of 1.5, as suggested in the post I downloaded the workflow from. Anyway, I still have a lot to play around with, but I'd like to try my hand at a working WAN 2.2 setup. Can you share some more insight into your workflow and the models you're currently using? If you already did, maybe I missed the post.

Thanks!

Hey there, sorry for the late reply, I was under the weather for a bit. Good deal on trying ComfyUI; I hope you're having as much fun with it as I am. It can look like a lot, going strictly by the visuals, but once you run a workflow or two as-is (getting the models it mentions in place) and watch a YouTube video here and there, it starts to get easier to manage and change. Don't be afraid to move things around so they make sense to you. It's 100% flexible, as you know, so every workflow out there is just laid out however its author chose. I tend to rearrange things so they fit on one screen.

I highly recommend watching this video from start to finish, maybe multiple times, on one screen while you follow along on another if you have two. It's really easy to follow. The narrator's voice is friendly (possibly AI-generated, but maybe not; I don't hear the odd pauses or mispronunciations you can get with AI voices), and he takes you from start to finish creating a workflow yourself. This is what gave me more confidence and melted away any thought of "I can't do this stuff".

Wan 2.2 Image to Video for AI Commercials Comfyui GGUF

I haven't been active because of a stomach issue plus work, but as I'm typing this I have a video being generated from this image; it will most likely be done before I finish here.

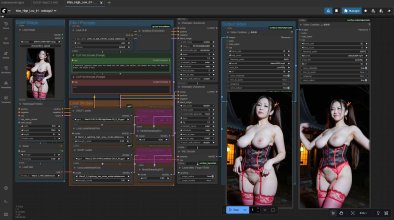

I've attached the workflow I used. Again, I moved things around, maybe in a non-standard way, but only because I now know how things connect and in what order, from all the videos and articles and from looking at other workflows. This one uses the Wan 2.2 high-noise and low-noise models. I got spoiled with LTX early on, but it has languished and hasn't produced things as fast as the Wan group and its community. They finally have something new about to come out, but by now I've moved on. Yes, LTX is faster, but the quality and prompt adherence are way better with Wan (maybe just for now).

Here are the models and "lightning" LoRAs I used. These are the GGUF versions, though, since the full models are over 20GB each and take a while to load, for starters. If your system can handle it, you can try those, but you'll need to replace the GGUF loaders with the standard model loaders (in ComfyUI's native Wan templates that's the "Load Diffusion Model" node):

Models (GGUF):

- Wan2.2-I2V-A14B-HighNoise-Q4_K_M.gguf
- Wan2.2-I2V-A14B-LowNoise-Q4_K_M.gguf

Lightning LoRAs:

- Wam2_2_Lightning_high_noise_model.safetensors
- Wam2_2_Lightning_low_noise_model.safetensors

For the prompt, I keep it simple. I've read more than one person say that for image-to-video you don't have to describe in detail what is already in the image: the model can see there's a woman there, so there's no need to describe her or the surroundings. That kind of detail is more essential for text-to-video.

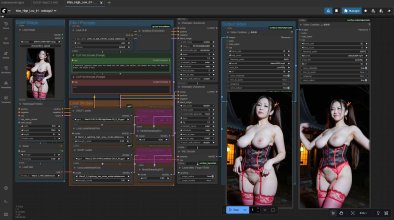
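On the high-noise/low-noise question from the first post: in the usual two-model Wan 2.2 setup, each model gets its own KSamplerAdvanced node, and the high-noise model hands its partially denoised latent to the low-noise model partway through the steps. Below is a minimal sketch of that chain as a fragment of a ComfyUI API-format (JSON) graph, written in Python. It is not the attached workflow. The node IDs, seed, euler/simple sampler settings, 4 total steps split 2/2, CFG 1.0, and the upstream node "10" (assumed to be a WanImageToVideo node providing positive, negative and latent outputs) are all assumptions. The loader helper picks `UnetLoaderGGUF` (from the ComfyUI-GGUF custom nodes) for `.gguf` files and the native `UNETLoader` ("Load Diffusion Model") otherwise, which is the swap you'd make for the full-size models.

```python
"""Minimal sketch of the two-stage Wan 2.2 I2V sampling chain in ComfyUI API format.

Assumptions (not from the original post): node IDs, seed, sampler/scheduler,
4 steps split 2/2, CFG 1.0, and the hypothetical upstream node "10"
(a WanImageToVideo node: outputs 0=positive, 1=negative, 2=latent).
This is a graph fragment; text encoder, VAE, decode and save nodes are omitted.
"""
import json

HIGH_GGUF = "Wan2.2-I2V-A14B-HighNoise-Q4_K_M.gguf"
LOW_GGUF = "Wan2.2-I2V-A14B-LowNoise-Q4_K_M.gguf"
HIGH_LORA = "Wam2_2_Lightning_high_noise_model.safetensors"
LOW_LORA = "Wam2_2_Lightning_low_noise_model.safetensors"


def loader(model_file: str) -> dict:
    """.gguf files need the ComfyUI-GGUF loader; full-size files use the native loader."""
    if model_file.endswith(".gguf"):
        return {"class_type": "UnetLoaderGGUF", "inputs": {"unet_name": model_file}}
    return {"class_type": "UNETLoader",
            "inputs": {"unet_name": model_file, "weight_dtype": "default"}}


def build_graph(high_model: str = HIGH_GGUF, low_model: str = LOW_GGUF,
                total_steps: int = 4, switch_at: int = 2, cfg: float = 1.0,
                seed: int = 42, cond_node: str = "10") -> dict:
    if not 0 < switch_at < total_steps:
        raise ValueError("switch_at must fall strictly inside the step range")
    positive, negative, latent = [cond_node, 0], [cond_node, 1], [cond_node, 2]
    # Settings shared by both samplers so the two stages use one noise schedule.
    shared = {"noise_seed": seed, "steps": total_steps, "cfg": cfg,
              "sampler_name": "euler", "scheduler": "simple",
              "positive": positive, "negative": negative}
    return {
        "1": loader(high_model),
        "2": loader(low_model),
        # Each Lightning LoRA is applied to its matching expert model.
        "3": {"class_type": "LoraLoaderModelOnly",
              "inputs": {"model": ["1", 0], "lora_name": HIGH_LORA,
                         "strength_model": 1.0}},
        "4": {"class_type": "LoraLoaderModelOnly",
              "inputs": {"model": ["2", 0], "lora_name": LOW_LORA,
                         "strength_model": 1.0}},
        # Stage 1: high-noise expert adds noise, runs steps [0, switch_at) and
        # returns a latent that still carries the leftover noise.
        "5": {"class_type": "KSamplerAdvanced",
              "inputs": {**shared, "model": ["3", 0], "latent_image": latent,
                         "add_noise": "enable", "start_at_step": 0,
                         "end_at_step": switch_at,
                         "return_with_leftover_noise": "enable"}},
        # Stage 2: low-noise expert continues from that latent without adding new noise.
        "6": {"class_type": "KSamplerAdvanced",
              "inputs": {**shared, "model": ["4", 0], "latent_image": ["5", 0],
                         "add_noise": "disable", "start_at_step": switch_at,
                         "end_at_step": total_steps,
                         "return_with_leftover_noise": "disable"}},
    }


if __name__ == "__main__":
    print(json.dumps(build_graph(), indent=2))
```

To try the full-size models instead, pass their filenames as `high_model` and `low_model`; any name not ending in `.gguf` gets the native loader. The missing nodes (text encoder, VAE, WanImageToVideo, decode and save) would still have to be added before the graph could actually be queued.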

At any rate, the video generation just finished. It was the first run of the night, so the 30min total included loading all the models; the generation itself was probably around 16min. Subsequent runs are faster. I'll be honest and post what came out: they're not all golden, and it can take a while to get what you want, or just to avoid the odd things and anomalies that can and will pop up. She's a bit all over the place because I put too many actions in the prompt, a habit I picked up to avoid slow-motion videos after reading a question from @kharo88 back in August. I'm sure if I remove something, she will behave, haha.

EDIT: Wow, the new one actually took a bit longer to generate (around 19min) with the hop removed from the prompt. I did notice it hung a bit on the prompt node, but I'm not sure that contributed much to the length of the run. Oh well. What I learned pretty quickly was not to watch this paint dry; I either listen to music on YouTube or watch something and check back.

mega.nz